From Chaos to Clarity: Orchestrating the Predictive Supply Chain

Discover how predictive supply chains use AI-driven forecasting to reduce lost sales, optimize inventory, and protect margins in volatile markets.

Success starts with people. In times of overwhelming change, your teams deserve a partner that empowers them. Meet our minds, the real intelligence behind ML6 — designed to amplify yours.

Stop buying solutions: generate them and own them. We’re building the platform that spawns agents to do work for you. From enterprise-scale systems to tailored industry and departmental applications, we help you transform the way you work today.

Experience the power of AI for your business through our client cases & testimonials. These stories aren’t just case studies; they’re proof that bold ideas, the right people, and a little AI magic can change the game.

This is where breakthrough ideas emerge and your inner innovator is awakened. Get inspired by the best of ML6's insights and the minds shaping the future of AI.

Our engineers partner with the world’s leading technology providers to deliver robust, scalable & sovereign solutions.

For technology leaders like you, the challenge is no longer if AI can create value—it’s how to make it enterprise-ready, scalable, and future-proof. That’s where ML6 comes in. We combine technical excellence with scalable engineering to solve your toughest challenges and build your boldest ideas.

Our primary aim is to ensure our AI solutions deliver tangible value and are actually used in your core business activities.

We combine deep technical expertise with pragmatic delivery to design, deploy, and run secure AI solutions that accelerate your strategy, unlock your data, and deliver business impact.

AI Business Advisory: We help you turn your AI vision into impact, pinpointing high-impact use cases and guiding people, governance, and architecture to accelerate execution.

AI Technical Advisory: We help your teams make the right AI, data, and cloud decisions, building robust, future-proof solutions that deliver value from day one.

In this phase, we align fully on the approach and performance metrics, carry out functional analysis, set up the initial solution, and prepare and analyze validation data.

AI lives or dies by data, so we start there. We then design and optimize systems you can rely on for business-critical performance, and we keep improving that performance over time. We make sure your AI integrates seamlessly into existing systems, strengthening operations rather than disrupting them. All of this comes with enterprise-grade security built in from day one.

Source internal and external datasets with a focus on usability and relevance. Where needed, we can create synthetic data.

Label data efficiently with a mix of automation, tooling, and managed services.

Apply anonymization and pseudonymization to ensure compliance and enable safe cloud processing.

Implement rigorous data quality management and augmentation techniques to strengthen training sets.

Set up cloud-native data warehouses on Azure, AWS and Google Cloud Platform that serve as scalable, AI-ready backbones.
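To make the pseudonymization step above more concrete, here is a minimal Python sketch, assuming keyed hashing (HMAC-SHA256) is an acceptable pseudonymization technique for your compliance requirements. The function names, field names, and the hard-coded key are hypothetical illustrations, not ML6's implementation.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a stable keyed hash (HMAC-SHA256).

    The same value and key always produce the same token, so joins across
    tables keep working, while the original value cannot be read back
    without the key.
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_records(records, fields, key):
    """Return copies of the records with the given fields pseudonymized.

    Fields that are missing or None are left as they are; all other
    columns (for example, amounts) pass through unchanged.
    """
    out = []
    for record in records:
        cleaned = dict(record)  # shallow copy so the input is not mutated
        for field in fields:
            if cleaned.get(field) is not None:
                cleaned[field] = pseudonymize(str(cleaned[field]), key)
        out.append(cleaned)
    return out


if __name__ == "__main__":
    # Hypothetical key; in practice it would come from a secrets manager.
    key = b"example-secret-key"
    rows = [{"customer_id": "C-001", "email": "a@example.com", "amount": 42.0}]
    print(pseudonymize_records(rows, ["customer_id", "email"], key))
```

Keeping the key outside the processed dataset is what separates this from plain hashing: whoever holds only the pseudonymized data cannot simply hash candidate identifiers to re-identify people.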

Develop and fine-tune bespoke ML algorithms tailored to your context.

Apply optimization techniques to reduce inference costs and latency.

Build explainable AI frameworks that address the “black box” problem, which is crucial for adoption and compliance.

Embed ethical AI practices such as bias detection, mitigation, and human-in-the-loop review processes.
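One simple form of the bias detection mentioned above is comparing positive-prediction rates across groups (the demographic parity difference). The sketch below assumes binary predictions and a single sensitive attribute; the 0.1 threshold and the helper names are hypothetical and would be chosen per use case, with flagged results routed to human review.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (1) predictions per group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for pred, group in zip(predictions, groups):
        counts[group][0] += int(pred == 1)
        counts[group][1] += 1
    return {g: pos / total for g, (pos, total) in counts.items()}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rate (0 = parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def needs_human_review(predictions, groups, threshold=0.1):
    """Flag a batch for human-in-the-loop review when the gap is too large."""
    return demographic_parity_difference(predictions, groups) > threshold


if __name__ == "__main__":
    preds = [1, 1, 0, 1, 0, 0]
    groups = ["a", "a", "a", "b", "b", "b"]
    print(selection_rates(preds, groups))
    print(needs_human_review(preds, groups))
```

Demographic parity is only one of several fairness criteria; which metric fits depends on the decision being automated.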

Deploy solutions optimized for performance—whether on cloud, on-prem, or at the edge.

We establish and maintain the robust infrastructure necessary for your solution's reliability and availability.

When needed, we integrate edge devices, sensors, IoT solutions, and robotics into your AI workflows.

We choose hybrid and multi-cloud architectures that balance flexibility with governance.

Connect seamlessly to APIs and legacy platforms.

Build robust edge integrations between models, devices, and machinery.

Enable interoperability with enterprise IT and OT environments.

Implement multi-cloud security strategies to safeguard sensitive workloads.

Apply customer-managed encryption to keep control in your hands.

Ensure IT/OT security for industrial environments, closing the gap between operations and IT governance.

Once an architecture is built, the real challenge begins — getting it into production at scale. We ensure that our AI doesn’t stall at the proof-of-concept stage.

We implement Continuous Integration (CI) and Continuous Delivery/Deployment (CD) pipelines, retraining loops, monitoring, and governance frameworks that make AI production-ready and sustainable.
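As an example of what a monitoring step in such a retraining loop might check, the sketch below computes the population stability index (PSI) between a reference feature distribution and live data. The equal-width binning, the 0.2 retraining threshold (a common rule of thumb, not a universal standard), and the function names are assumptions for illustration.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a reference sample and a live sample of one feature."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # avoid division by zero if all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)  # clamp the max value into the last bin
            counts[idx] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def should_retrain(reference, live, threshold=0.2):
    """Trigger retraining when the live distribution has drifted too far."""
    return population_stability_index(reference, live) > threshold


if __name__ == "__main__":
    reference = [i / 100 for i in range(100)]
    live = [0.9 + i / 1000 for i in range(100)]  # strongly shifted sample
    print(should_retrain(reference, reference), should_retrain(reference, live))
```

In a real pipeline a check like this would run per feature on a schedule, with the result feeding an alert or an automated retraining job.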

This is a crucial stage for formal validation, covering end-to-end integration and user testing before go-live.

This final phase covers the gradual rollout to all users, go-live support, the setup of knowledge bases, and continuous monitoring to ensure the solution runs smoothly in production.
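A gradual rollout is often implemented by assigning each user to a stable bucket and then raising the share of enabled buckets over time. This is a minimal sketch assuming hash-based bucketing on a user ID; the salt, the bucket count, and the function names are hypothetical.

```python
import hashlib

BUCKETS = 10_000  # hypothetical granularity: 0.01% steps


def rollout_bucket(user_id: str, salt: str) -> int:
    """Map a user to a stable bucket in [0, BUCKETS) for a given release salt."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % BUCKETS


def is_enabled(user_id: str, rollout_fraction: float, salt: str = "release-1") -> bool:
    """True if the user falls inside the currently enabled share of buckets."""
    return rollout_bucket(user_id, salt) < rollout_fraction * BUCKETS


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(1000)]
    for fraction in (0.1, 0.5, 1.0):
        enabled = sum(is_enabled(u, fraction) for u in users)
        print(f"{fraction:.0%} rollout -> {enabled} of {len(users)} users")
```

Because a user's bucket never changes for a given salt, anyone enabled at 10% stays enabled at 50%, which keeps the experience consistent as the rollout widens.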

We don’t build black boxes. Our teams create robust documentation and co-develop with your engineers, ensuring your organization can operate and extend the solution independently if required.

Adoption is as critical as accuracy. We prepare your users and teams with the right training, tools, and organizational support to effectively integrate AI into their daily workflows.

We migrate and scale solutions across environments—cloud, hybrid, or on-prem—ensuring seamless integration with your existing IT landscape.

AI isn’t a one-off project—it’s a living system that requires monitoring, optimization, and support.

With us, there’s no “that’s not part of the product vision” and no awkward “we don’t integrate with that.” Our AI is built the way your company actually runs, not the way a product manager thinks it should. Every solution is tailor-made to fit your strategy and company like a glove: no compromises, no roadblocks, no missed opportunities. Just the exact intelligence your organization needs to move faster, smarter, and stronger.

When the margin for error is small, you need a partner with the right depth, experience, and mindset.

From BASF and Randstad to Sappi and Walmart, global enterprises rely on ML6 to deliver AI that works, technically and strategically. We have 13 years of AI-native experience and have deployed over 450 complex use cases. Our customers give us an average score of 9/10 in NPS surveys, and many have come back for several projects.

We’re the ones who do, not just talk. You benefit from top-tier AI engineering rooted in deep technical knowledge that is 3–5 years ahead of the market. For example, we launched our first GenAI solution in 2019 and continue to lead in complex deployments. We go beyond the hype to deliver future-proof solutions aligned with your business DNA.

Over 90% of our team holds a Master’s degree or a PhD, and our team dedicates 17% of its time to testing emerging technologies. We’re advanced partners of all major cloud platforms, with direct access to engineers at AWS, Azure, Google Cloud Platform, and OpenAI, bringing the latest tech to your business, responsibly.

We work side-by-side with your team to align business and tech, focusing on the few initiatives that truly move the needle. Our agile team model combines a stable core with just-in-time expertise.

Stay in the loop with stories from our team, success cases from our clients, and news on what we’re building behind the scenes. Whether it's a deep dive into the latest in AI, a behind-the-scenes look at a real-world implementation, or product updates we’re proud of — here’s what’s been happening lately.

Discover the MLOps architecture behind ML6’s system imbalance forecaster. Go beyond the notebook with a deep dive into cloud-native data pipelines, automated training factories, and high-availability inference engines designed for real-time energy grid operations.

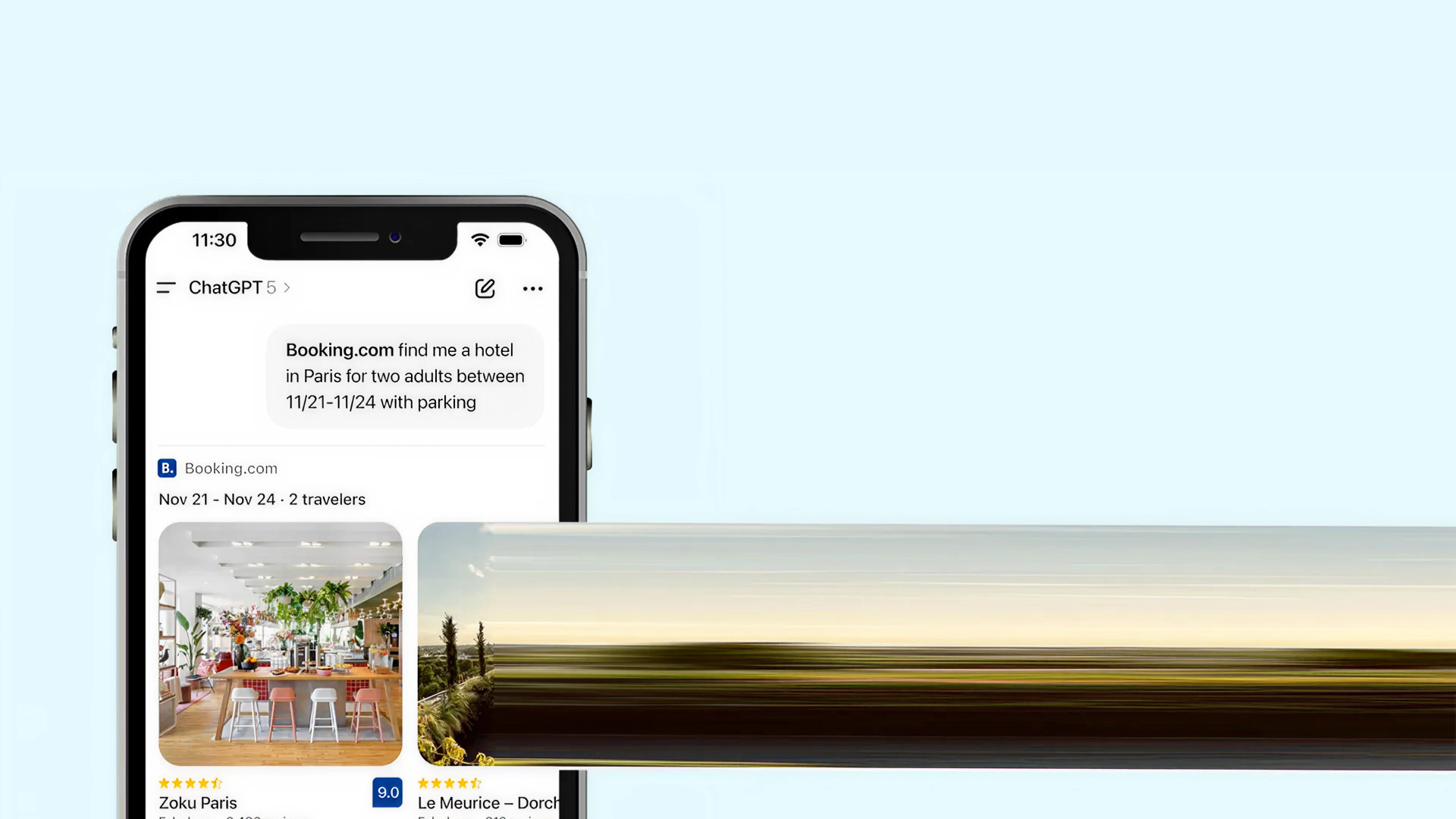

Explore how ChatGPT apps transform customer journeys by moving discovery, decision-making, and action into conversational AI interfaces.

At ML6, we’ve partnered with the world’s most ambitious enterprises to build their AI vision together. Let's use AI to turn your business strategy into operational impact today.