April 14, 2021

Why and how to do an AI Ethical Risk Assessment?

Artificial Intelligence has gained significant traction in recent years, and we strongly believe that AI can do a lot of good. However, we recognize that along with these developments there are growing concerns about the use of AI and its impact on society. In this blog post we explain what Ethical and Trustworthy AI is and how we at ML6 help our clients unlock the full value of AI and build trust with their customers, employees and society as a whole.

A brief intro to Trustworthy and Ethical AI

At ML6, we firmly believe that AI has the potential to do a lot of good, from creating business value and increasing economic growth to helping tackle major societal challenges such as climate change, mobility and health. However, this potential can only be reached if customers, employees and society trust the AI solutions that are being developed. This is the case for Trustworthy AI: only by aligning the design and application of AI with ethical and legal values, and communicating transparently about them, can we foster trust in and adoption of AI technology.

Let’s take a step back first.

What do we mean when talking about Trustworthy AI? Trustworthy AI has 3 components: Lawful AI, Ethical AI and Robust AI.

With Lawful AI things are quite clear - all development of AI has to comply with applicable laws and regulations, for example GDPR. With the other two components, things get trickier, as it is challenging to define explicit, actionable rules and to reach a common understanding.

Why is it so challenging? Well, the ethics of a technology start with the ethical values of its creators, meaning the developers and anyone else involved in the process of creating an AI solution. However, ethics are inherently personal, and there is no ‘right answer’. Every one of us has different principles, often depending on the context and culture we grew up in.

So what do we need to consider when developing Trustworthy AI?

There are certain risks involved when building AI solutions, which need to be taken into account and mitigated proactively. The EU defines those risks along 7 dimensions:

- Human agency and oversight

- Technical robustness and safety

- Privacy and data governance

- Transparency

- Diversity, non-discrimination and fairness

- Societal and environmental wellbeing

- Accountability

Let’s look at an example to make this more tangible. Think of an AI solution that predicts the most suitable job applicants for an open position. When this solution is fed with data from the past, the algorithm might pick up that male applicants have been considered more often for, say, an engineering position, and thus ‘learn’ that men are better suited for this position. Here, training on biased historical data introduces a bias in our predictions. Such a risk needs to be identified and corrected for.
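To make the idea of 'identifying' such a risk a bit more concrete, here is a minimal sketch of one possible first check: comparing how often each group was shortlisted in the historical data. The data, the group labels and the function names are hypothetical and chosen purely for illustration. This is not part of the ML6 framework, and a real bias analysis would go considerably further.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of shortlisted applicants per group.

    `records` is an iterable of (group, shortlisted) pairs.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, shortlisted in records:
        totals[group] += 1
        if shortlisted:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def selection_rate_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was shortlisted in any group
    return min(rates.values()) / highest


# Hypothetical historical hiring data: (group, was the applicant shortlisted?)
history = (
    [("male", True)] * 6
    + [("male", False)] * 4
    + [("female", True)] * 3
    + [("female", False)] * 7
)

rates = selection_rates(history)
ratio = selection_rate_ratio(rates)
print(rates)
print(f"selection rate ratio: {ratio:.2f}")
```

A ratio well below 1.0, as in this toy data, suggests that a model trained on this history could reproduce the imbalance. It is a signal to investigate further, not proof of discrimination on its own.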

The ML6 AI Ethical Risk Assessment - what is it?

Recently, we have seen an increase in interest in the topic of Ethical AI, with companies asking us to help them navigate this complex environment, especially in high-risk sectors such as HR, the public sector and healthcare. We therefore decided to open up the internal framework we use to assess the ethical risks of our AI projects to our clients - in the form of the AI Ethical Risk Assessment.

What is the AI Ethical Risk Assessment offering? It is ML6’s approach to understanding and evaluating the risks associated with a specific AI solution/use case. ML6 has developed a framework based on the EU Ethics guidelines for Trustworthy AI, aiming to enable the assessment of projects or ideas on a more practical level.

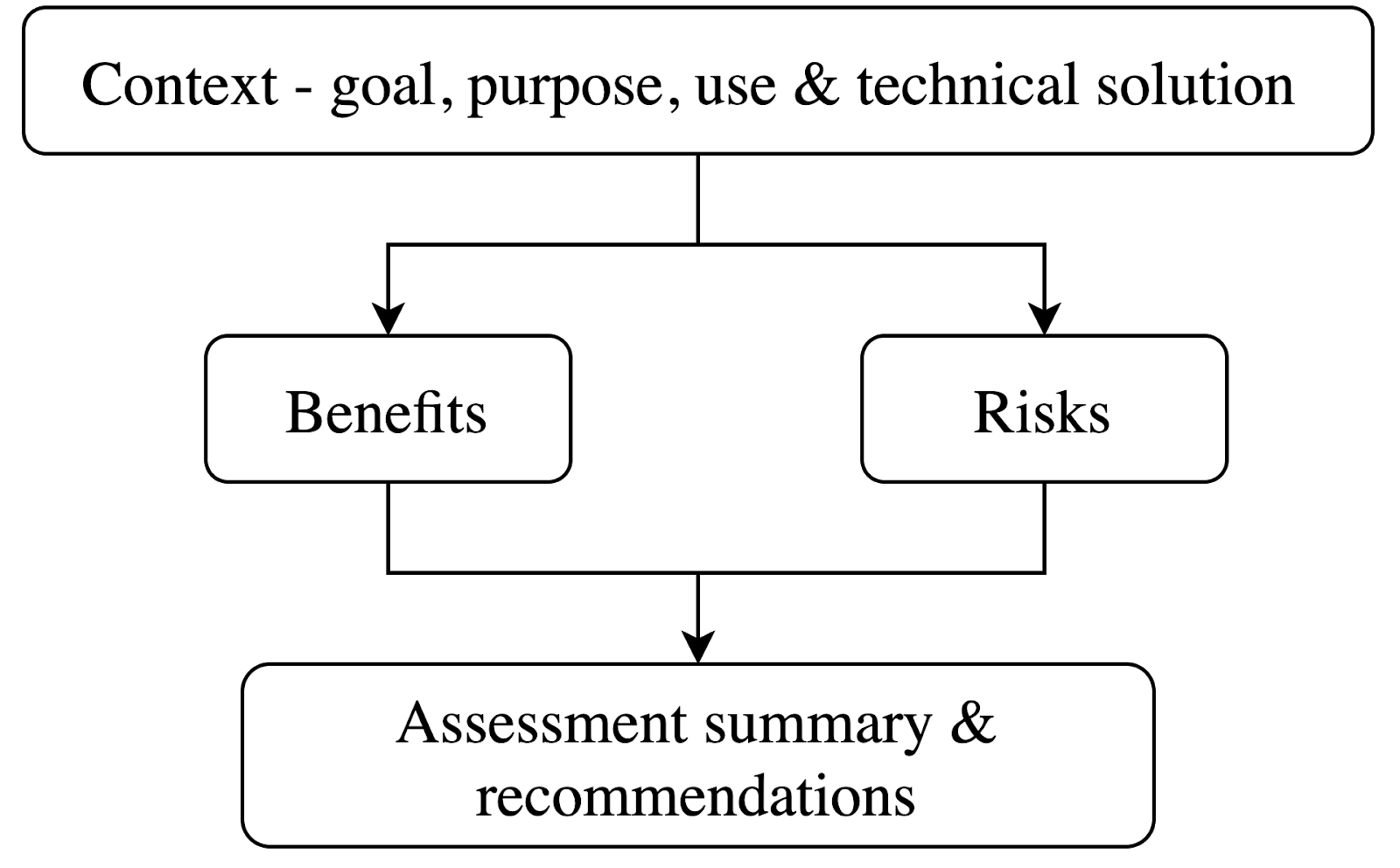

During the short engagement, our ethical AI experts work towards a report that highlights the benefits and risks of a specific AI use case and recommends potential actions to mitigate or limit these risks. Through interviews with business and technical experts on the client side, we get a clear picture of the goal and purpose, the technology and the intended use of the application.

We then look on the one hand at the benefits of the solution for individuals, the company and society, and on the other hand at the ethical risks in the 7 risk dimensions. For each dimension, we have developed a set of specific questions to help guide the thought process. Scoring each of these dimensions on a scale from 0 to 5, we can highlight the high-risk dimensions for a certain project in an executive summary and suggest mitigating actions.
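As a rough illustration of how scores per dimension can be turned into a shortlist for an executive summary, here is a minimal sketch. The dimension names come from the list above. Everything else is an assumption made for this example and not the actual ML6 scoring logic: the example scores, the convention that a higher score means higher risk, and the threshold of 4.

```python
# The seven risk dimensions from the EU guidelines, as listed in this post.
DIMENSIONS = [
    "Human agency and oversight",
    "Technical robustness and safety",
    "Privacy and data governance",
    "Transparency",
    "Diversity, non-discrimination and fairness",
    "Societal and environmental wellbeing",
    "Accountability",
]


def high_risk_dimensions(scores, threshold=4):
    """Return the dimensions scored at or above `threshold`, highest first.

    Assumes scores run from 0 to 5 and that a higher score means higher risk.
    The threshold is a hypothetical cut-off chosen for illustration.
    """
    missing = [d for d in DIMENSIONS if d not in scores]
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    for dimension in DIMENSIONS:
        if not 0 <= scores[dimension] <= 5:
            raise ValueError(f"score out of range for: {dimension}")
    flagged = [d for d in DIMENSIONS if scores[d] >= threshold]
    # sorted() is stable, so ties keep the order of DIMENSIONS.
    return sorted(flagged, key=lambda d: -scores[d])


# Hypothetical scores for a job-matching use case.
example_scores = {
    "Human agency and oversight": 3,
    "Technical robustness and safety": 2,
    "Privacy and data governance": 4,
    "Transparency": 3,
    "Diversity, non-discrimination and fairness": 5,
    "Societal and environmental wellbeing": 2,
    "Accountability": 3,
}

flagged = high_risk_dimensions(example_scores)
for dimension in flagged:
    print(f"{dimension}: {example_scores[dimension]}/5")
```

In practice, the scores come out of the guided questions and expert judgment. The code only shows the final step of surfacing the dimensions that deserve the most attention.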

It is important to note that we always have to take the context of a solution into account. An AI application matching job candidates to potential jobs will likely carry higher risks than a solution to detect defects on the manufacturing line.

Why and when should you care?

Now, when should you consider an AI Ethical Risk Assessment?

We currently see three scenarios in which such an assessment is useful:

- Do you have an idea for a new product or service that involves AI? Are you not yet sure of the risks of this AI project and what to pay extra attention to during development? Embed a risk assessment at the start of your project and incorporate considerations and mitigating actions from the get-go.

- Did you already start building a solution, maybe getting close to deploying it for broader use, and want to build trust in your solution? Show your customers as well as your employees that you are considering ethical implications and proactively address risks.

- Have you already deployed your AI solution, but some specific concerns have been raised by customers or employees? Let ML6 conduct an independent review and create the necessary documentation to help mitigate concerns and suggest ways to address risk areas.

With our Ethics Advisory offering we want to support you in developing trustworthy solutions, help you build trust in AI with your customers and employees, and help limit your exposure to risk. Equipped with this framework, you will also be able to assess future use cases yourself.

Did we spark your interest? Reach out to us if you want to know more or would like us to support you in assessing the ethical risks of your AI application!
