Letter from the CEO — How we went from Big Data to Superintelligence

Explore the journey from Big Data to Superintelligence and how ML6 is pioneering the future of AI, delivering impactful solutions, and transforming businesses.

Success starts with people. In times of overwhelming change, your teams deserve a partner that empowers them. Meet our minds, the real intelligence behind ML6 — designed to amplify yours.

Stop buying solutions: generate them and own them. We’re building the platform that spawns agents to do work for you. From enterprise-scale systems to tailored industry and departmental applications, we help you transform the way you work today.

Experience the power of AI for your business through our client cases & testimonials. These stories aren’t just case studies; they’re proof that bold ideas, the right people, and a little AI magic can change the game.

This is where breakthrough ideas emerge and your inner innovator is awakened. Get inspired by the best of ML6's insights and the minds shaping the future of AI.

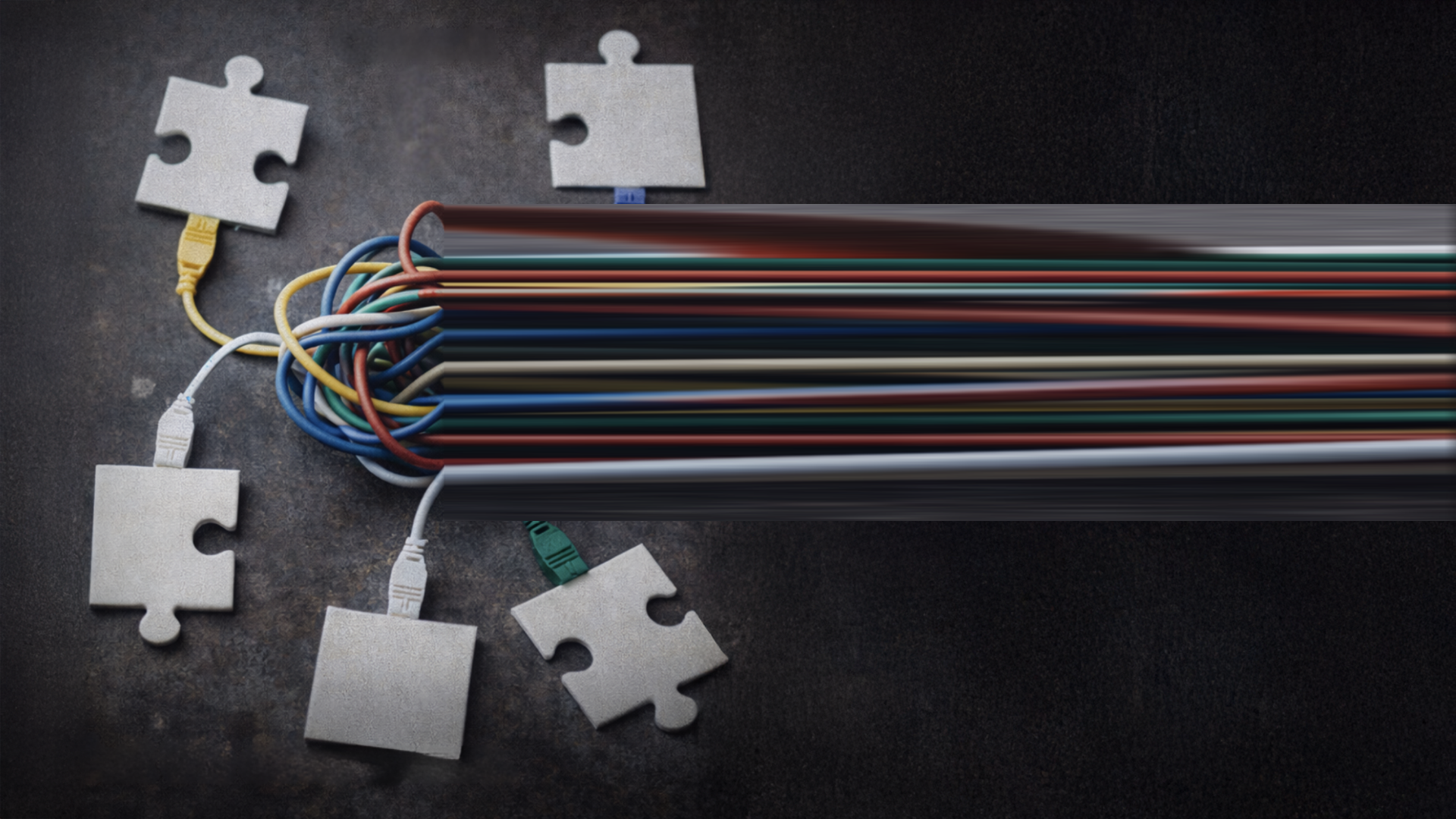

Our engineers partner with the world’s leading technology providers to deliver robust, scalable & sovereign solutions.

AI agents powered by Deep Research and RAG analyzed the Olof Palme investigation, navigating thousands of digitized police files in just four days.

Discover how predictive supply chains use AI-driven forecasting to reduce lost sales, optimize inventory, and protect margins in volatile markets.

Discover the MLOps architecture behind ML6’s system imbalance forecaster. Go beyond the notebook with a deep dive into cloud-native data pipelines, automated training factories, and high-availability inference engines designed for real-time energy grid operations.

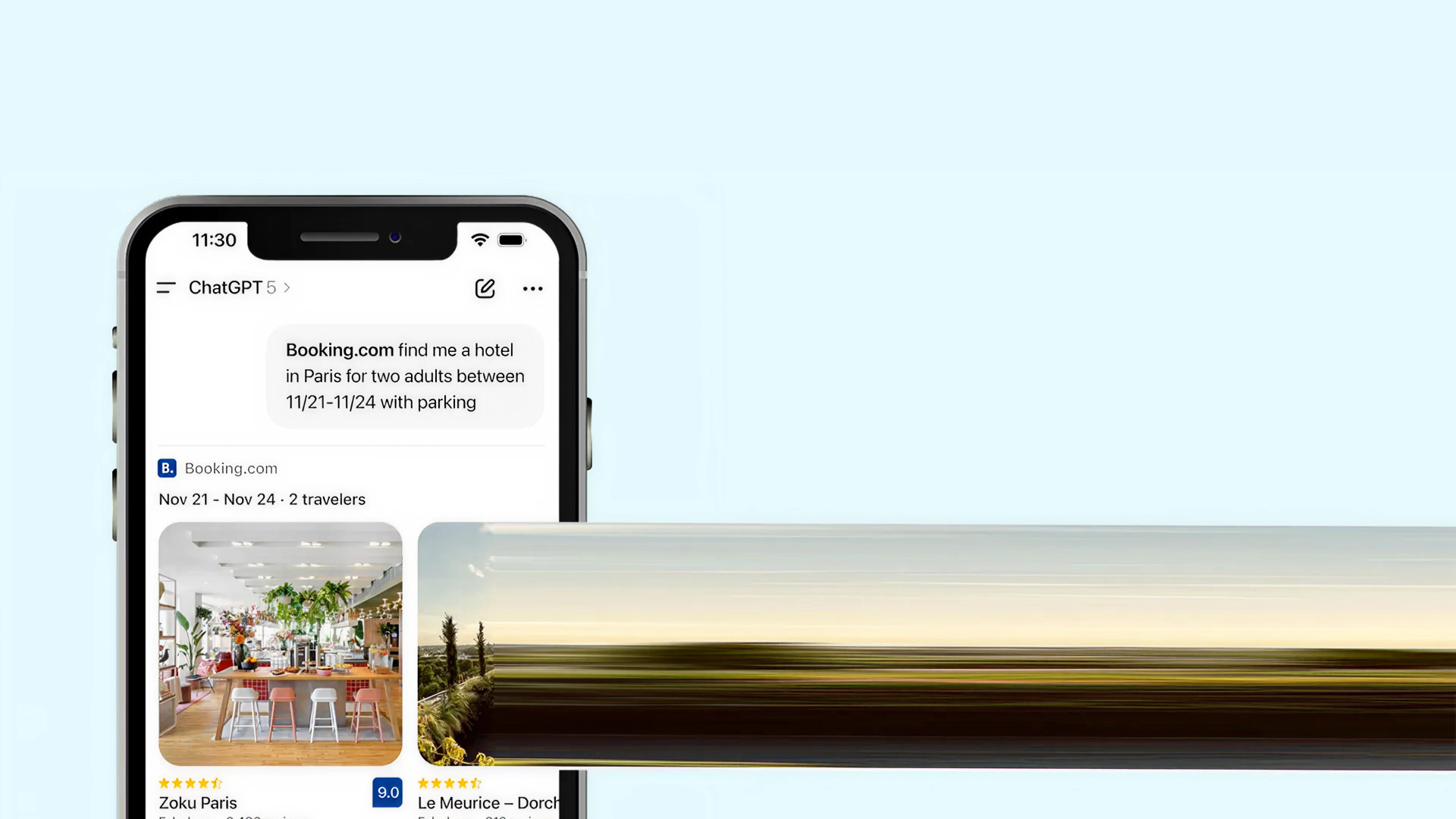

Explore how ChatGPT apps transform customer journeys by moving discovery, decision-making, and action into conversational AI interfaces.

Learn how AI agents transform the telecom customer experience by connecting fragmented data, improving customer support, and boosting operational efficiency.

Explore how a production-grade AI system forecasts real-time system imbalance in Belgium, reducing forecast error by 16% compared to the transmission system operator's (TSO) reference forecasts under live operational constraints.

A technical breakdown of the architecture of Lovable apps, covering frontend and backend components, execution boundaries, and key architectural trade-offs.

Compare managed vs. custom AI agent solutions in 2026. Learn when to use each, what the key trade-offs are, and how to choose between them for production AI systems.

AI-native engineering boosts speed, but at a cost. Learn how teams balance the speed of AI-generated code against quality, security, and ownership without overloading reviewers.